Both Literature Reviews and Systematic Reviews Are Types of Research Evidence Reviews

- Review

- Open Access

What are the best methodologies for rapid reviews of the research evidence for evidence-informed decision making in health policy and practice: a rapid review

Health Research Policy and Systems volume 14, Article number: 83 (2016)

Abstract

Background

Rapid reviews have the potential to overcome a key barrier to the use of research evidence in decision making, namely that of the lack of timely and relevant research. This rapid review of systematic reviews and primary studies sought to answer the question: What are the best methodologies to enable a rapid review of research evidence for evidence-informed decision making in health policy and practice?

Methods

This rapid review utilised systematic review methods and was conducted according to a pre-defined protocol including clear inclusion criteria (PROSPERO registration: CRD42015015998). A comprehensive search strategy was used, including published and grey literature, written in English, French, Portuguese or Spanish, from 2004 onwards. Eleven databases and two websites were searched. Two review authors independently applied the eligibility criteria. Data extraction was done by one reviewer and checked by a second. The methodological quality of included studies was assessed independently by two reviewers. A narrative summary of the results is presented.

Results

Five systematic reviews and one randomised controlled trial (RCT) that investigated methodologies for rapid reviews met the inclusion criteria. None of the systematic reviews were of sufficient quality to allow firm conclusions to be made. Thus, the findings need to be treated with caution. There is no agreed definition of rapid reviews in the literature and no agreed methodology for conducting rapid reviews. While a wide range of 'shortcuts' are used to make rapid reviews faster than a full systematic review, the included studies found little empirical evidence of their impact on the conclusions of either rapid or systematic reviews. There is some evidence from the included RCT (that had a low risk of bias) that rapid reviews may improve clarity and accessibility of research evidence for decision makers.

Conclusions

Greater care needs to be taken in improving the transparency of the methods used in rapid review products. There is no evidence available to suggest that rapid reviews should not be done or that they are misleading in any way. We offer an improved definition of rapid reviews to guide future research as well as clearer guidance for policy and practice.

Background

In May 2005, the World Health Assembly called on WHO Member States to "establish or strengthen mechanisms to transfer knowledge in support of evidence-based public health and healthcare delivery systems, and evidence-based health-related policies" [1]. Knowledge translation has been defined by WHO as: "the synthesis, exchange, and application of knowledge by relevant stakeholders to accelerate the benefits of global and local innovation in strengthening health systems and improving people's health" [2]. Knowledge translation seeks to address the challenges to the use of scientific evidence in order to close the gap between the evidence generated and decisions being made.

To achieve better translation of knowledge from research into policy and practice it is important to be aware of the barriers and facilitators that influence the use of research evidence in health policy and practice decision making [3–8]. The most frequently reported barriers to evidence uptake are poor access to good quality relevant research and lack of timely and relevant research output [7, 9]. The most frequently reported facilitators are collaboration between researchers and policymakers, improved relationships and skills [7], and research that accords with the beliefs, values, interests or practical goals and strategies of decision makers [10].

In relation to access to good quality relevant research, systematic reviews are considered the gold standard and these are used as a basis for products such as practice guidelines, health technology assessments, and evidence briefs for policy [11–14]. However, there is a growing need to provide these evidence products faster and with the needs of the decision-maker in mind, while also maintaining credibility and technical quality. This should help to overcome the barrier of lack of timely and relevant research, thereby facilitating their use in decision making. With this in mind, a range of methods for rapid reviews of the research evidence have been developed and put into practice [15–18]. These often include modifications to systematic review methods to make them faster than a full systematic review. Some examples of modifications that have been made include (1) a more targeted research question/reduced scope; (2) a reduced list of sources searched, including limiting these to specialised sources (e.g. of systematic reviews, economic evaluations); (3) articles searched in the English language only; (4) reduced timeframe of search; (5) exclusion of grey literature; (6) use of search tools that make it easier to find literature; and (7) use of only one reviewer for study selection and/or data extraction. Given the emergence of this approach, it is important to develop a knowledge base regarding the implications of such 'shortcuts' on the strength of evidence being delivered to decision makers. At the time of conducting this review, we were not aware of any high quality systematic reviews on rapid reviews and their methods.

It is important to note that a range of terms have been used to describe rapid reviews of the research evidence, including evidence summaries, rapid reviews, rapid syntheses, and brief reviews, with no clear definitions [15, 16, 18–22]. In this paper, we have used the term 'rapid review', despite starting with the term 'rapid evidence synthesis' in our protocol, as it became clear during the conduct of our review that it is the most widely used term in the literature [23]. We consider a wide range of rapid reviews, including rapid reviews of effectiveness, problem definition, aetiology, diagnostic tests, and reviews of cost and cost-effectiveness.

The rapid review presented in this article is part of a larger project aimed at designing a rapid response program to support evidence-informed decision making in health policy and practice [24]. The expectation is that a rapid response will facilitate the use of research for decision making. We have labelled this study as a rapid review because it was conducted in a limited timeframe and with the needs of health policy decision-makers in mind. It was commissioned by policy decision-makers for their immediate use.

The objective of this rapid review was to use the best available evidence to answer the following research question: What are the best methodologies to enable a rapid review of research evidence for evidence-informed decision making in health policy and practice? Both systematic reviews and primary studies were included. Note that we have deliberately used the term 'best methodologies' as it is likely that a variety of methods will be needed depending on the research question, timeframe and needs of the decision maker.

Methods

This rapid review used systematic review methodology and adheres to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses statement [25]. A systematic review protocol was written and registered prior to undertaking the searches [26]. Deviations from the protocol are listed in Additional file 1.

Inclusion criteria for studies

Studies were selected based on the inclusion criteria stated below.

Types of studies

Both systematic reviews and primary studies were sought. For inclusion, priority was given to systematic reviews and to primary studies that used one of the following designs: (1) individual or cluster randomised controlled trials (RCTs) and quasi-randomised controlled trials; (2) controlled before and after studies where participants are allocated to control and intervention groups using non-randomised methods; (3) interrupted time series with before and after measurements (and preferably with at least three measures); and (4) cost-effectiveness/cost-utility/cost-benefit. Other types of studies were also identified for consideration for inclusion in case no systematic reviews and few primary studies with strong study designs (as indicated above in 1–4) could be found. They were initially selected provided that they described some type of evaluation of methodologies for rapid reviews.

Types of participants

Apart from needing to be within the field of health policy and practice, the types of participants were not restricted, and the level of analysis could be at the level of the individual, organisation, system or geographical area. During the study selection process, we made a decision to also include 'products', i.e. papers that include rapid reviews as the unit of inclusion rather than people.

Types of articles/interventions

Studies that evaluated methodologies or approaches to rapid reviews for health policy and/or practice, including systematic reviews, practice guidelines, technology assessments, and evidence briefs for policy, were included.

Types of comparisons

Suitable comparisons (where relevant to the article type) included no intervention, another intervention, or current practice.

Types of outcome measures

Relevant outcome measures included time to complete; resources required to complete (e.g. cost, personnel); measures of synthesis quality; measures of efficiency of methods (measures that combine aspects of quality with time to complete, e.g. limiting data extraction to key characteristics and results that may reduce the time needed to complete without impacting on review quality); satisfaction with methods and products; and implementation. During the study selection process the authors agreed to include two additional outcomes that were not in the published protocol but important for the review, namely comparison of findings between the different synthesis methods (e.g. rapid vs. systematic review) and cost-effectiveness.

Publications in English, French, Portuguese or Spanish, from any country and published from 2004 onwards were included. The year 2004 was chosen as this is the year of the Mexico Ministerial Summit on Health Research, where the know-do gap was first given serious attention by health ministers [27]. Both grey and peer-reviewed literature was sought and included.
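The language and publication-year criteria above can be expressed as a simple screening filter. The following is a minimal sketch only: the `Record` class, its field names and the function name are hypothetical illustrations, not part of the review's actual workflow, which relied on human reviewers.

```python
from dataclasses import dataclass

# Eligibility limits taken from the inclusion criteria stated above.
ELIGIBLE_LANGUAGES = {"English", "French", "Portuguese", "Spanish"}
EARLIEST_YEAR = 2004


@dataclass
class Record:
    """Hypothetical bibliographic record used only for this illustration."""
    title: str
    language: str
    year: int


def meets_publication_criteria(record: Record) -> bool:
    """Return True if the record satisfies the language and year limits."""
    return record.language in ELIGIBLE_LANGUAGES and record.year >= EARLIEST_YEAR


if __name__ == "__main__":
    examples = [
        Record("Example A", "French", 2004),
        Record("Example B", "German", 2010),
        Record("Example C", "English", 2003),
    ]
    for rec in examples:
        print(rec.title, meets_publication_criteria(rec))
```

A filter like this only covers the mechanical part of eligibility; the criteria for study design, participants, interventions and outcomes still require reviewer judgement.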

Search methods for identification of studies

A comprehensive search of 11 databases and two websites was conducted. The databases searched were CINAHL, the Cochrane Library (including Cochrane Reviews, the Database of Abstracts of Reviews of Effectiveness, the Health Technology Assessment database, NHS Economic Evaluation Database, and the database of Methods Studies), EconLit, EMBASE, Health Systems Evidence, LILACS and Medline. The websites searched were Google and Google Scholar.

Grey literature and manual search

Some of the selected databases index a combination of published and unpublished studies (for example, doctoral dissertations, conference abstracts and unpublished reports); therefore, unpublished studies were partially captured through the electronic search process. In addition, Google and Google Scholar were searched. The authors' own databases of knowledge translation literature were also searched by hand for relevant studies. The reference list of each included study was searched. Contact was made with nine key authors and experts in the area for further studies, of whom five responded.

Search strategy

Searches were conducted between 15th January and 3rd February 2015 and supplementary searches (reference lists, contact with authors) were conducted in May 2015. Databases were searched using the keywords: "rapid literature review*" OR "rapid systematic review*" OR "rapid scoping review*" OR "rapid review*" OR "rapid approach*" OR "rapid synthesis" OR "rapid syntheses" OR "rapid evidence assess*" OR "evidence summar*" OR "realist review*" OR "realist synthesis" OR "realist syntheses" OR "realist evaluation" OR "meta-method*" OR "meta method*" OR "realist approach*" OR "meta-evaluation*" OR "meta evaluation*". Keywords were searched for in title and abstract, except where otherwise stated in Additional file 2. Results were downloaded into the EndNote reference management program (version X7) and duplicates removed. The Internet search utilised the search terms: "rapid review"; "rapid systematic review"; "realist review"; "rapid synthesis"; and "rapid evidence".

Screening and selection of studies

Searches were conducted and screened according to the selection criteria by one review author (MH). The full text of any potentially relevant papers was retrieved for closer examination. This reviewer erred on the side of inclusion where there was any doubt about its inclusion to ensure no potentially relevant papers were missed. The inclusion criteria were then applied against the full text version of the papers (where available) independently by two reviewers (MH and RC). For studies in Portuguese and Spanish, other authors (EC, LR or JB) played the role of second reviewer. Disagreements regarding eligibility of studies were resolved by discussion and consensus. Where the two reviewers were still uncertain about inclusion, the other reviewers (EC, LR, JB, JL) were asked to provide input to achieve consensus. All studies which initially appeared to meet the inclusion criteria, but on inspection of the full text paper did not, were detailed in a table 'Characteristics of excluded systematic reviews,' together with reasons for their exclusion.
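As an illustration of how the keyword list above combines into a single Boolean query, the following minimal sketch joins the quoted terms with OR. The function name is hypothetical, and real databases each have their own field tags and truncation syntax (see Additional file 2 for the strategies actually used), so the output would need adapting per database.

```python
# Keywords as listed in the search strategy; * is the truncation wildcard.
KEYWORDS = [
    "rapid literature review*",
    "rapid systematic review*",
    "rapid scoping review*",
    "rapid review*",
    "rapid approach*",
    "rapid synthesis",
    "rapid syntheses",
    "rapid evidence assess*",
    "evidence summar*",
    "realist review*",
    "realist synthesis",
    "realist syntheses",
    "realist evaluation",
    "meta-method*",
    "meta method*",
    "realist approach*",
    "meta-evaluation*",
    "meta evaluation*",
]


def build_or_query(keywords):
    """Quote each phrase and join the phrases with the Boolean OR operator."""
    return " OR ".join(f'"{keyword}"' for keyword in keywords)


if __name__ == "__main__":
    print(build_or_query(KEYWORDS))
```

Keeping the terms in a list rather than a hand-typed string makes it easier to avoid operator inconsistencies when the same strategy is re-run across several databases.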

Application of the inclusion criteria by the two reviewers was performed as follows. First, all studies that met the inclusion criteria for participants, interventions and outcomes were selected, providing that they described some type of evaluation of methodologies for rapid evidence synthesis. At this stage, the study type was assessed and categorised by the two reviewers as being a (1) systematic review; (2) primary study with a strong study design, i.e. of one of the four types identified above; or (3) 'other' study design (that provided some type of evaluation of methodologies for rapid evidence synthesis). The reason for this was to enable the reviewers to make a decision as to which study designs should be included (based on available evidence, it was not known if sufficient evidence would be found if only systematic reviews and primary studies with strong study designs were included from the outset) and because of interest from the funders in other study types. Following discussion between all co-authors it was decided that it was likely that sufficient evidence could be provided from the first two categories of study type. Thus, the third group was excluded from data extraction but are listed in Additional file 3.

Data extraction

Information extracted from studies and reviews included objectives, target population, method/s tested, outcomes reported, country of study/studies and results. For systematic reviews we also extracted the date of last search, the included study designs and the number of studies. For primary studies, we also extracted the year of study, the study design and the population size. Data extraction was performed by one reviewer (MH) and checked by a second reviewer (RC). Disagreements were resolved through discussion and consensus.

Assessment of methodological quality

The methodological quality of included studies was assessed independently by two reviewers using AMSTAR: A MeaSurement Tool to Assess Reviews [28] for systematic reviews and the Cochrane Risk of Bias Tool for RCTs [29]. Disagreements in scoring were resolved by discussion and consensus. For this review, systematic reviews that achieved AMSTAR scores of 8 to 11 were considered high quality; scores of 4 to 7 medium quality; and scores of 0 to 3 low quality. These cut-offs are commonly used in Cochrane Collaboration overviews. The study quality assessment was used to interpret their results when synthesised in this review and in the formulation of conclusions.
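The AMSTAR cut-offs described above amount to a simple banding rule. The sketch below encodes them; the function name is a hypothetical illustration, and it assumes integer scores on the 0 to 11 AMSTAR scale.

```python
def classify_amstar(score: int) -> str:
    """Map an AMSTAR score (0-11) to the quality band used in this review."""
    if not 0 <= score <= 11:
        raise ValueError("AMSTAR scores range from 0 to 11")
    if score >= 8:
        return "high"    # scores of 8 to 11
    if score >= 4:
        return "medium"  # scores of 4 to 7
    return "low"         # scores of 0 to 3


if __name__ == "__main__":
    # Scores reported in the Results for the five included systematic reviews.
    for score in [2, 2, 2, 2, 4]:
        print(score, classify_amstar(score))
```

Applied to the scores reported in the Results, this gives four low quality reviews and one medium quality review, matching the authors' classification.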

Data analysis

Findings from the included publications were synthesised using tables and a narrative summary. Meta-analysis was not possible because the included studies were heterogeneous in terms of the populations, methods and outcomes tested.

Results

Search results

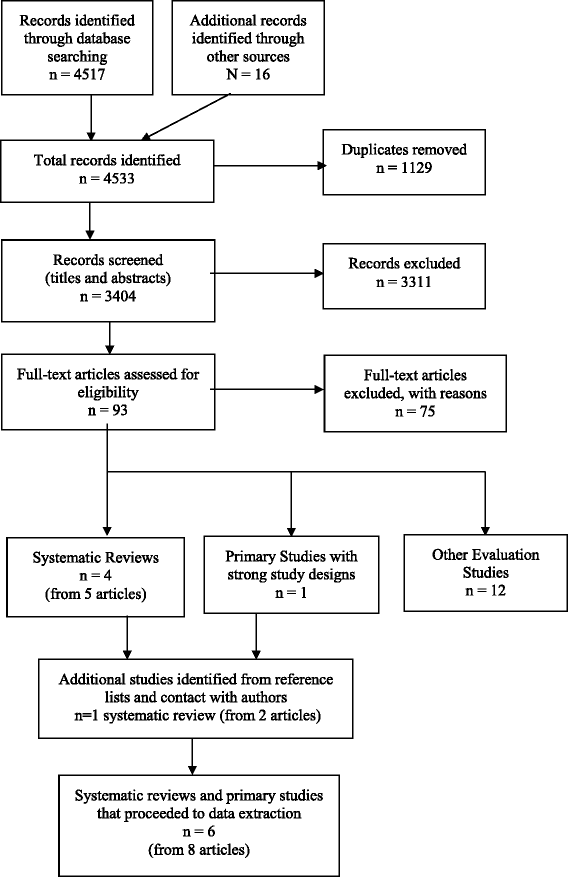

Five systematic reviews (from seven articles) [18, 19, 21, 30–33] and one primary study with a strong study design – an RCT [34] – met the inclusion criteria for the review. The selection process for studies and the numbers at each stage are shown in Fig. 1. The reasons for exclusion of the 75 papers at full text stage are shown in Additional file 3. The 12 evaluation studies excluded from data extraction due to weak study designs are also listed at the end of Additional file 3.

Study selection flow chart – Methods for rapid reviews

Characteristics of included studies and quality assessment

Characteristics of the included systematic reviews are summarised in Table 1, with full details provided in Additional file 4. All rapid reviews were targeted at healthcare decision makers and/or agencies conducting rapid reviews (including rapid health technology assessments). Only two of the systematic reviews offered a definition of "rapid review" to guide their reviews [19, 30, 33]. Three of the systematic reviews obtained samples of rapid review products – though not necessarily randomly – and examined aspects of the methods used in their production [18, 19, 21]. Three of the systematic reviews reviewed articles on rapid review methods [18, 30, 32]. Two of these also included a comparison of findings from rapid reviews and systematic reviews conducted for the same topic [18, 32].

None of the systematic reviews that were identified examined the outcomes of resources required to complete, synthesis quality, efficiency of methods, satisfaction with methods and products, implementation, or cost-effectiveness. However, while not explicitly assessing synthesis/review quality, all of the reviews did report the methods used to conduct the rapid reviews. We have reported these details as they give an indication of the quality of the review. Therefore, the outcomes reported in the included systematic reviews and recorded in Table 1 and Additional file 4 do not align perfectly with those proposed in our inclusion criteria. In addition, we have included some data that was not pre-defined but for which we extracted information because it provided important contextual data, e.g. type of product, definition, rapid review initiation and rationale, nomenclature, and content. The reporting of the results was also further complicated by the use of a narrative, rather than a quantitative, synthesis of the results in the included studies.

It is not possible to say how many unique studies are included in these systematic reviews because only one review actually included a list of included studies [30] and one a characteristics of included studies table (but not in a form that was easy to use) [21]. However, it is clear that there is likely to be significant overlap in studies between reviews. For instance, the most recent systematic review by Hartling et al. [31, 32] also included the four previous systematic reviews included in this rapid review [18, 19, 21, 30, 33].

The RCT was targeted at healthcare professionals involved in clinical guideline development [34]. It aimed to assess the effectiveness of different evidence summary formats for use in clinical guideline development. Three different packs were tested – pack A: a systematic review alone; pack B: a systematic review with summary-of-findings tables included; and pack C: an evidence synthesis and systematic review. Pack C is described by the authors of the study as: "a locally prepared, short, contextually framed, narrative report in which the results of the systematic review (and other evidence where relevant) were described and locally relevant factors that could influence the implementation of evidence-based guideline recommendations (e.g. resource capacity) were highlighted" [34]. We interpreted pack C as being a 'rapid review' for the purposes of this review as the authors state that it is based on a comprehensive search and critical appraisal of the best currently available literature, which included a Cochrane review, an overview of systematic reviews and RCTs, and additional RCTs but was likely to have been done in a short timeframe. It was also conducted to help improve decision making. The primary outcome measured was the proportion of correct responses to key clinical questions, whilst the secondary outcome was a composite score comprised of clarity of presentation and ease of locating the quality of evidence [34]. This study was not included in any previous systematic reviews.

Four of the systematic reviews obtained AMSTAR scores of 2 (low quality) and one a score of 4 (medium quality). No high quality systematic reviews were found. Thus, the findings of the systematic reviews should be taken as indicative only and no firm conclusions can be made. The RCT was classified as low risk of bias on the Cochrane Risk of Bias tool. The quality assessments can be found in Additional file 5.

Findings

Definition of a 'rapid review'

The five systematic reviews are consistent in stating that there is no agreed definition of rapid reviews and no agreed methodology for conducting rapid reviews [18, 19, 21, 30–33]. According to the authors of one review: "the term 'rapid review' does not appear to have one single definition but is framed in the literature as utilizing various stipulated time frames between 1 and 6 months" [21, p. 398]. The definitions offered to guide the reviews by Abrami et al. [19] and Cameron et al. [30] both use a timeframe of up to 6 months (Table 1). Cameron et al. [30] also include in their definition the requirement that the review contains the elements of a comprehensive search – though they do not offer criteria to assess this.

Abrami et al. [19] use the term 'brief review' rather than 'rapid review' to emphasise that both timeframe and scope may be affected. They write that "a brief review is an examination of empirical evidence that is limited in its timeframe (e.g. six months or less to complete) and/or its scope, where scope may include:

- the breadth of the question being explored (e.g. a review of one-to-one laptop programs versus a review of technology integration in schools);
- the timeliness of the evidence included (e.g. the last several years of research versus no time limits);
- the geographic boundaries of the evidence (e.g. inclusion of regional or national studies only versus international evidence);
- the depth and detail of analyses (e.g. reporting only overall findings versus also exploring variability among the findings); or
- otherwise more restrictive study inclusion criteria than might be seen in a comprehensive review." [19, p. 372].

All other included systematic reviews used the term 'rapid review' or 'rapid health technology assessment' to describe rapid reviews.

Methods used based on examples of rapid reviews

While the word 'rapid' indicates that it will be carried out quickly, there is no consistency in published rapid reviews as to how it is made rapid and which part, or parts, of the review are carried out at a faster pace than a full systematic review [18, 19, 21]. A further complexity is the reporting of methods used in the rapid review, with almost 43% of the rapid reviews examined by Abrami et al. [19] not describing their methodology comprehensively. Three examples of 'shortcuts' taken are (1) not using two reviewers for study selection and/or data extraction; (2) not conducting a quality assessment of included studies; and (3) not searching for grey literature [18, 19, 21]. However, it is important to note that the rapid reviews examined in these three systematic reviews were not necessarily selected randomly and, thus, it is not possible to accurately quantify the proportion of rapid reviews taking various 'shortcuts' and which 'shortcuts' are the most common. The time taken for the reviews examined varied from several days to one year [19]; 3 weeks to 6 months [18]; and 7–12 (mean 10.42, SD 7.1) months [21].

Methods used based on studies of rapid review methods

Methodological approaches or 'shortcuts' used in rapid reviews to make them faster than a full systematic review include [18, 19, 32] limiting the number of questions; limiting the scope of questions; searching fewer databases; limited use of grey literature; restricting the types of studies included (e.g. English only, most recent 5 years); relying on existing systematic reviews; eliminating or limiting hand searching of reference lists and relevant journals; narrow time frame for article retrieval; using non-iterative search strategy; eliminating consultation with experts; limiting full-text review; limiting dual review for study selection, data extraction and/or quality assessment; limiting data extraction; limiting risk of bias assessment or grading; minimal evidence synthesis; providing minimal conclusions or recommendations; and limiting external peer review. Harker et al. [21] found that, with increasing timeframes, fewer of the 'shortcuts' were used and that, with longer timeframes, it was more likely that risk of bias assessment, evidence grading and external peer review would be conducted [21].

None of the included systematic reviews offer firm guidelines for the methodology underpinning rapid reviews. Rather, they report that many articles written about rapid reviews offer only examples and discussion surrounding the complexity of the area [30].

Supporting evidence for shortcuts

While authors of the included systematic reviews tend to agree that changes to scope or timeframe can introduce biases (e.g. selection bias, publication bias, language of publication bias) they found little empirical evidence to support or refute that claim [18, 19, 21, 30, 32].

The review by Ganann et al. [18] included 45 methodological studies that considered issues such as the impact of limiting the number of databases searched, hand searching of reference lists and relevant journals, omitting grey literature, only including studies published in English, and omitting quality assessment. However, they were unable to provide clear methodological guidelines based on the findings of these studies.

Comparison of findings – rapid reviews versus systematic reviews

A key question is whether the conclusions of a rapid review are fundamentally different to a full systematic review, i.e. whether they are sufficiently different to change the resulting decision. This is an area where the research is extremely limited. There are few comparisons of full and rapid reviews that are available in the literature to be able to determine the impact of the above methodological changes – only two primary studies were reported in the included systematic reviews [35, 36]. It is important to note that neither of these studies met, on their own, the inclusion criteria for the review in that they did not have a sufficiently strong study design. Both are included in the list of 12 studies excluded from data extraction (Fig. 1 and Additional file 3). Thus, they provide a very low level of evidence.

One of the primary studies compared full and rapid reviews on the topics of drug eluting stents, lung volume reduction surgery, living donor liver transplantation and hip resurfacing [30, 36]. There were no instances in which the essential conclusions of the rapid and full reviews were opposed [32]. The other compared a rapid review with a full systematic review on the use of potato peels for burns [35]. The results and conclusions of the two reports were different. The authors of the rapid review suggest that this is because the systematic review was not of sufficiently good quality – as they missed two important trials in their search [35]. However, the limited detail on the methods used to conduct the systematic review makes this case study of limited value. Further research is needed in this area.

Impact of rapid syntheses on understanding of decision makers

The included RCT by Opiyo et al. [34] examined the impact of different evidence summary or synthesis formats on knowledge of the evidence, with each participant receiving a pack containing three different summaries; they found no differences between packs in the odds of correct responses to key clinical questions. Pack C (the rapid review) was associated with a higher mean composite score for clarity and accessibility of information about the quality of evidence for critical neonatal outcomes compared to systematic reviews alone (pack A) (adjusted mean difference 0.52, 95% confidence interval, 0.06–0.99). Findings from interviews with 16 panellists indicated that short narrative evidence reports (pack C) were preferred for the improved clarity of information presentation and ease of use. The authors concluded that their "findings suggest that 'graded-entry' evidence summary formats may improve clarity and accessibility of research evidence in clinical guideline development" [34, p. 1].

Discussion

This review is the first high quality review (using systematic reviews as the gold standard for literature reviews) published in the literature that provides a comprehensive overview of the state of the rapid review literature. It highlights the lack of definition, lack of defined methods and lack of research evidence showing the implications of methodological choices on the results of both rapid reviews and systematic reviews. It also adds to the literature by offering clearer guidance for policy and practice than has been offered in previous reviews (see Implications for policy and practice).

While five systematic reviews of methods for rapid reviews were found, none of these were of sufficient quality to allow firm conclusions to be made. Thus, the findings need to be treated with caution. There is no agreed definition of rapid reviews in the literature and no agreed methodology for conducting rapid reviews [18, 19, 21, 30–33]. However, the systematic reviews included in this review are consistent in stating that a rapid review is generally conducted in a shorter timeframe and may have a reduced scope. A wide range of 'shortcuts' are used to make rapid reviews faster than a full systematic review. While authors of the included systematic reviews tend to agree that changes to scope or timeframe can introduce biases (e.g. selection bias, publication bias, language of publication bias) they found little empirical evidence to support or refute that claim [18, 19, 21, 30, 32]. Further, there are few comparisons available in the literature of full and rapid reviews to be able to determine the impact of these 'shortcuts'. There is some evidence from a good quality RCT with low risk of bias that rapid reviews may improve clarity and accessibility of research evidence for decision makers [34], which is a unique finding from our review.

A scoping review published after our search found over 20 different names for rapid reviews, with the most frequent term being 'rapid review', followed by 'rapid evidence assessment' and 'rapid systematic review' [23]. An associated international survey of rapid review producers and modified Delphi approach counted 31 different names [37]. With regard to rapid review methods and definitions, the scoping review found 50 unique methods, with 16 methods occurring more than once [23]. For their scoping review and international survey, Tricco et al. utilised the working definition: "a rapid review is a type of knowledge synthesis in which components of the systematic review process are simplified or omitted to produce information in a short period of time" [23, 37].

The authors of the most recent systematic review of rapid review methods suggest that: "the similarity of rapid products lies in their close relationship with the end-user to meet decision making needs in a limited timeframe" [32, p. 7]. They suggest that this feature drives other differences, including the large range of products often produced by rapid response groups, and the wide variation in methods used [32] – even within the same product type produced by the same group. We suggest that this characteristic of rapid reviews needs to be part of the definition and considered in future research on rapid reviews, including whether it actually leads to better uptake of research. To aid future research, we propose the following definition: a rapid review is a type of systematic review in which components of the systematic review process are simplified, omitted or made more efficient in order to produce information in a shorter period of time, preferably with minimal impact on quality. Further, rapid reviews involve a close relationship with the end-user and are conducted with the needs of the decision-maker in mind.

When comparing rapid reviews to systematic reviews, the confounding effects of quality of the methods used must be considered. If rapid syntheses of research are seen as systematic reviews performed faster and if systematic reviews are seen as the gold standard for evidence synthesis, the quality of the review is likely to depend on which 'shortcuts' were taken and this can be assessed using available quality measures, e.g. AMSTAR [28]. While Cochrane Collaboration systematic reviews are consistently of a very high quality (achieving 10 or 11 on the AMSTAR scale, based on our own experience) the same cannot be said for all systematic reviews that can be found in the published literature or in databases of systematic reviews – as is demonstrated by this review where AMSTAR scores were quite low (Additional file 5) and a related overview where AMSTAR scores varied between 2 and 10 [24, Additional file 1]. This fact has not been acknowledged in previous syntheses of the rapid review literature. It is also possible for rapid reviews to reach high AMSTAR scores if conducted and reported well. Therefore, it can be easily argued that a high quality rapid review is likely to provide an answer closer to the 'truth' than a systematic review of low quality. It is also an argument for using the same tool for assessing the quality of both systematic and rapid reviews.

Authors of the published systematic reviews of rapid reviews propose that, rather than focusing on developing a formalised methodology, which may not be appropriate, researchers and users should focus on increasing the transparency of the methods used for each review [18, 30, 33]. Indeed, several AMSTAR criteria are highly dependent on the transparency of the write-up rather than the methodology itself. For example, there are many instances of both systematic and rapid review authors not stating that they used a protocol for their review when, in fact, they did use one, leading to a loss of one point on the AMSTAR scale. Another example is review authors failing to provide an adequate description of the study selection and data extraction process, thus making it difficult for those assessing the quality of the review to determine if this was done in duplicate, which is again a loss of one point on the AMSTAR scale.

While it could be argued that none of the included reviews described their review as a systematic review, we believe that it is appropriate to assess their quality using the AMSTAR tool. This is the best tool available, to our knowledge, to assess and compare the quality of review methods and considers the major potential sources for bias in reviews of the literature [28, 38]. Further, the five reviews included were clearly not narrative reviews as each described their methods, including sources of studies, search terms and inclusion criteria used.

Strengths and limitations

A key strength of this rapid review is the use of high quality systematic review methodology, including the consideration of the scientific quality of the included studies in formulating conclusions. A meta-analysis was not possible due to the heterogeneity in terms of intervention types and populations studied in the included systematic reviews. As a result, publication bias could not be assessed quantitatively in this review and no clear methods are available for assessing publication bias qualitatively [39]. Shortcuts taken to make this review more rapid, as well as an AMSTAR assessment of the review, are shown in Additional file 6. The AMSTAR assessment is based on the published tool [28] and additional guidance provided on the AMSTAR website (http://amstar.ca/Amstar_Checklist.php).

The current rapid review is evidence that a review can include several shortcuts and be produced in a relatively short amount of time without sacrificing quality, as shown by the high AMSTAR score (Additional file 6). The time taken to complete this review was 7 months from signing of contract (November 2014) to submission of the final report to the funder (June 2015). Alternatively, if publication of the protocol on PROSPERO and the start of literature searching (January 2015) are taken as the starting point, the time taken was 5 months.

Limitations of this review include (1) the low quality of the systematic reviews found, with three of the four included systematic reviews judged as low quality on the AMSTAR criteria and the fourth just making it to medium quality (Additional file 5); (2) the fact that few primary studies were conducted in developing countries, which is an issue for the generalisability of the results; and (3) restricting the search to articles in English, French, Spanish or Portuguese (languages with which the review authors are competent) and to the last ten years. Nevertheless, this was done to expedite the review process and is unlikely to have resulted in the loss of important evidence.

Implications for policy and practice

Users of rapid reviews should request an AMSTAR rating and a clear indication of the shortcuts taken to make the review process faster. Producers of rapid reviews should give greater consideration to the 'write-up' or presentation of their reviews to make their review methods more transparent and to enable a fair quality assessment. This could be facilitated by including the appropriate elements in templates and/or guidelines. If a shorter report is required, the necessary detail could be placed in appendices.

When deciding what methods and/or process to use for their rapid reviews, producers of rapid reviews should give priority to shortcuts that are unlikely to impact on the quality or risk of bias of the review. Examples include limiting the scope of the review [19], limiting data extraction to key characteristics and results [32], and restricting the study types included in the review [32]. When planning the rapid review, the review producer should explain to the user the implications of any shortcuts taken to make the review faster.

Producers of rapid reviews should consider maintaining a larger, highly skilled and experienced staff who can be mobilised quickly and who understand the type of products that might meet the needs of the decision maker [19, 32]. Consideration should also be given to making the process more efficient [19]. These measures can aid timelines without compromising quality.

Implications for research

The impact on the results of rapid reviews (and systematic reviews) of any 'shortcuts' used requires further research, including which 'shortcuts' have the greatest impact on the review findings. Tricco et al. [23, 37] suggest that this could be examined through a prospective study that compares the results of rapid reviews to those obtained through systematic reviews on the same topic. However, to do this, it will be important to consider quality as a confounding factor and ensure random selection and blinding of the rapid review producers. If random selection and blinding cannot be guaranteed, we suggest that retrospective comparisons may be more appropriate. Another, related approach would be to compare findings of reviews (be they systematic or rapid) for each type of shortcut, controlling for methodological quality. Other issues, such as the breadth of the inclusion criteria used and number of studies included, would also need to be considered as possible confounding factors.

The development of reporting guidelines for rapid reviews, as are available for full systematic reviews, would also help [18, 25]. These should be heavily based on systematic review guidelines but also consider characteristics specific to rapid reviews such as the relationship with the review user.

Finally, future studies and reviews should also address the outcomes of review quality, satisfaction with methods and products, implementation and cost-effectiveness as these outcomes were not measured in any of the included studies or reviews. Effectiveness of rapid reviews in increasing the use of research evidence in policy decision-making is also an important area for further research.

Conclusions

Care needs to be taken in interpreting the results of this rapid review on the best methodologies for rapid review given the limited state of the literature. There is a wide range of methods currently used for rapid reviews and a wide range of products available. However, greater care needs to be taken in improving the transparency of the methods used in rapid review products to enable better analysis of the implications of methodological 'shortcuts' taken for both rapid reviews and systematic reviews. This requires the input of policymakers and practitioners, as well as researchers. There is no evidence available to suggest that rapid reviews should not be done or that they are misleading in any way.

Abbreviations

- AMSTAR: A MeaSurement Tool to Assess Reviews

- PAHO: Pan American Health Organization

- PRISMA: Preferred reporting items for systematic reviews and meta-analysis

- RCT: Randomised controlled trial

References

-

World Health Assembly. Resolution on Health Research. 2005. http://www.who.int/rpc/meetings/58th_WHA_resolution.pdf. Accessed 17 Nov 2016.

-

World Health Organization. Bridging the "Know–Do" Gap: Meeting on Knowledge Translation in Global Health, 10–12 October 2005. 2006 Contract No. WHO/EIP/KMS/2006.2. Geneva: WHO; 2006.

-

Lavis J, Davies H, Oxman A, Denis JL, Golden-Biddle K, Ferlie E. Towards systematic reviews that inform health care management and policy-making. J Health Serv Res Policy. 2005;10 Suppl 1:35–48.

-

Liverani M, Hawkins B, Parkhurst JO. Political and institutional influences on the use of evidence in public health policy. A systematic review. PLoS ONE. 2013;8:e77404.

-

Moore G, Redman S, Haines A, Todd A. What works to increase the use of research in population health policy and programmes: a review. Evid Policy A J Res Debate Pract. 2011;7:277–305.

-

Nutley S. Bridging the policy/research divide. Reflections and lessons from the UK. Keynote paper presented at "Facing the future: Engaging stakeholders and citizens in developing public policy". Canberra: National Institute of Governance Conference; 2003. http://www.treasury.govt.nz/publications/media-speeches/guestlectures/nutley-apr03. Accessed 17 Nov 2016.

-

Oliver K, Innvar S, Lorenc T, Woodman J, Thomas J. A systematic review of barriers to and facilitators of the use of evidence by policymakers. BMC Health Serv Res. 2014;14:2.

-

Orton L, Lloyd-Williams F, Taylor-Robinson D, O'Flaherty M, Capewell S. The use of research evidence in public health decision making processes: systematic review. PLoS ONE. 2011;6:e21704.

-

World Health Organization. Knowledge Translation Framework for Ageing and Health. Geneva: Department of Ageing and Life-Course, WHO; 2012.

-

Lavis JN, Hammill AC, Gildiner A, McDonagh RJ, Wilson MG, Ross SE, et al. A systematic review of the factors that influence the use of research evidence by public policymakers. Final report submitted to the Canadian Population Health Initiative. Hamilton: McMaster University Program in Policy Decision-Making; 2005.

-

Chalmers I. If evidence-informed policy works in practice, does it matter if it doesn't work in theory? Evid Policy A J Res Debate Pract. 2005;1:227–42.

-

Lavis JN, Permanand G, Oxman AD, Lewin S, Fretheim A. SUPPORT Tools for evidence-informed health Policymaking (STP) 13: Preparing and using policy briefs to support evidence-informed policymaking. Health Res Pol Syst. 2009;7 Suppl 1:S13.

-

Stephens JM, Handke B, Doshi JA, On behalf of the HTA Principles Working Group - part of the International Society for Pharmacoeconomics and Outcomes Research (ISPOR) HTA Special Interest Group (SIG). International survey of methods used in health technology assessment (HTA): does practice meet the principles proposed for good research? Comp Effectiveness Res. 2012;2:29–44.

-

World Health Organization. WHO Handbook for Guideline Development. 2nd ed. Geneva: WHO; 2014.

-

Khangura S, Konnyu K, Cushman R, Grimshaw J, Moher D. Evidence summaries: the evolution of a rapid review approach. Syst Rev. 2012;1:10.

-

Khangura S, Polisena J, Clifford TJ, Farrah K, Kamel C. Rapid review: An emerging approach to evidence synthesis in health technology assessment. Int J Technol Assess Health Care. 2014;30:20–7.

-

Polisena J, Garrity C, Kamel C, Stevens A, Abou-Setta AM. Rapid review programs to support health care and policy decision making: a descriptive analysis of processes and methods. Syst Rev. 2015;4:26.

-

Ganann R, Ciliska D, Thomas H. Expediting systematic reviews: methods and implications of rapid reviews. Implement Sci. 2010;5:56.

-

Abrami PC, Borokhovski E, Bernard RM, Wade CA, Tamim R, Persson T, et al. Issues in conducting and disseminating brief reviews of evidence. Evid Policy A J Res Debate Pract. 2010;6:371–89.

-

Gough D, Thomas J, Oliver S. Clarifying differences between review designs and methods. Syst Rev. 2012;1:28.

-

Harker J, Kleijnen J. What is a rapid review? A methodological exploration of rapid reviews in Health Technology Assessments. Int J Evid Based Healthc. 2012;10:397–410.

-

Wilson MG, Lavis JN, Gauvin FP. Developing a rapid-response program for health system decision-makers in Canada: findings from an issue brief and stakeholder dialogue. Syst Rev. 2015;4:25.

-

Tricco AC, Antony J, Zarin W, Strifler L, Ghassemi M, Ivory J, et al. A scoping review of rapid review methods. BMC Med. 2015;13:224.

-

Haby MM, Chapman E, Clark R, Barreto J, Reveiz L, Lavis JN. Designing a rapid response program to support evidence-informed decision making in the Americas Region: using the best available evidence and case studies. Implement Sci. 2016;11:117.

-

Moher D, Liberati A, Tetzlaff J, Altman DG, Group P. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 2009;6:e1000097.

-

Haby M, Chapman E, Reveiz L, Barreto J, Clark R. Methodologies for rapid response for evidence-informed decision making in health policy and practice: an overview of systematic reviews and primary studies (Protocol). PROSPERO: CRD42015015998. 2015. http://www.crd.york.ac.uk/PROSPEROFILES/15998_PROTOCOL_20150016.pdf.

-

World Health Organization. Report from the Ministerial Summit on Health Research: Identify Challenges, Inform Actions, Correct Inequities. Mexico City: WHO; 2005. http://www.who.int/rpc/summit. Accessed 17 Nov 2016.

-

Shea BJ, Grimshaw JM, Wells GA, Boers M, Andersson N, Hamel C, et al. Development of AMSTAR: a measurement tool to assess the methodological quality of systematic reviews. BMC Med Res Methodol. 2007;7:10.

-

Higgins JPT, Green S, editors. Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 [updated March 2011]. The Cochrane Collaboration; 2011. http://handbook.cochrane.org/. Accessed 17 Nov 2016.

-

Cameron A, Watt A, Lathlean T, Sturm L. Rapid versus full systematic reviews: an inventory of current methods and practice in Health Technology Assessment. ASERNIP-S Report No. 60. Adelaide: ASERNIP-S, Royal Australasian College of Surgeons; 2007.

-

Featherstone RM, Dryden DM, Foisy M, Guise JM, Mitchell MD, Paynter RA, et al. Advancing knowledge of rapid reviews: an analysis of results, conclusions and recommendations from published review articles examining rapid reviews. Syst Rev. 2015;4:50.

-

Hartling L, Guise JM, Kato E, Anderson J, Aronson N, Belinson S, et al. EPC Methods: An Exploration of Methods and Context for the Production of Rapid Reviews. Research White Paper. Prepared by the Scientific Resource Center under Contract No. 290-2012-00004-C. AHRQ Publication No. 15-EHC008-EF. Rockville, MD: Agency for Healthcare Research and Quality; 2015.

-

Watt A, Cameron A, Sturm L, Lathlean T, Babidge W, Blamey S, et al. Rapid reviews versus full systematic reviews: an inventory of current methods and practice in health technology assessment. Int J Technol Assess Health Care. 2008;24:133–9.

-

Opiyo N, Shepperd S, Musila N, Allen E, Nyamai R, Fretheim A, et al. Comparison of alternative evidence summary and presentation formats in clinical guideline development: a mixed-method study. PLoS ONE. 2013;8:e55067.

-

Van de Velde S, De Buck E, Dieltjens T, Aertgeerts B. Medicinal use of potato-derived products: conclusions of a rapid versus full systematic review. Phytother Res. 2011;25:787–8.

-

Watt A, Cameron A, Sturm L, Lathlean T, Babidge W, Blamey S, et al. Rapid versus full systematic reviews: validity in clinical practice? Aust N Z J Surg. 2008;78:1037–40.

-

Tricco AC, Zarin W, Antony J, Hutton B, Moher D, Sherifali D, et al. An international survey and modified Delphi approach revealed numerous rapid review methods. J Clin Epidemiol. 2015;70:61–7.

-

Shea BJ, Hamel C, Wells GA, Bouter LM, Kristjansson E, Grimshaw J, et al. AMSTAR is a reliable and valid measurement tool to assess the methodological quality of systematic reviews. J Clin Epidemiol. 2009;62:1013–20.

-

Song F, Parekh S, Hooper L, Loke YK, Ryder J. Dissemination and publication of research findings: an updated review of related biases. Health Technol Assess. 2010;14(8):iii, ix–xi, 1–193.

Acknowledgements

We thank the authors of included studies and other experts in the field who responded to our request to identify further studies that could meet our inclusion criteria and/or responded to our queries regarding their study.

Funding

This work was developed and funded under the cooperation agreement # 47 between the Department of Science and Technology of the Ministry of Health of Brazil and the Pan American Health Organization. The funders of this study set the terms of reference for the project but, apart from the input of JB, EC and LR to the conduct of the study, did not significantly influence the work. Manuscript preparation was funded by the Ministry of Health Brazil, through an EVIPNet Brazil project with the Bireme/PAHO.

Availability of data and materials

The datasets supporting the conclusions of this article are included within the article and its additional files.

Authors' contributions

EC and JB had the original idea for the review and obtained funding; MH and EC wrote the protocol with input from RC, JB, and LR; MH and RC undertook the article selection, data extraction and quality assessment; MH undertook data synthesis and drafted the manuscript; JL, EC, RC, JB and LR provided guidance throughout the selection, data extraction and synthesis phase of the review; all authors provided commentary on and approved the final manuscript.

Competing interests

The author(s) declare that they have no competing interests. Neither the Ministry of Health of Brazil nor the Pan American Health Organization (PAHO), the funders of this research, have a vested interest in any of the interventions included in this review – though they do have a professional interest in increasing the uptake of research evidence in decision making. EC and LR are employees of PAHO and JB was an employee of the Ministry of Health of Brazil at the time of the study. However, the views and opinions expressed herein are those of the review authors and do not necessarily reflect the views of the Ministry of Health of Brazil or PAHO. JNL is involved in a rapid response service but was not involved in the selection or data extraction phases. MH, as part of her previous employment with an Australian state government department of health, was responsible for commissioning and using rapid reviews to inform decision making.

Consent for publication

Not applicable.

Ethics approval and consent to participate

Not applicable.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Haby, M.M., Chapman, E., Clark, R. et al. What are the best methodologies for rapid reviews of the research evidence for evidence-informed decision making in health policy and practice: a rapid review. Health Res Policy Sys 14, 83 (2016). https://doi.org/10.1186/s12961-016-0155-7

DOI: https://doi.org/10.1186/s12961-016-0155-7

Keywords

- Rapid reviews

- Knowledge translation

- Evidence-informed decision making

- Research uptake

- Health policy

Source: https://health-policy-systems.biomedcentral.com/articles/10.1186/s12961-016-0155-7
